InstructTime++ · Preprint

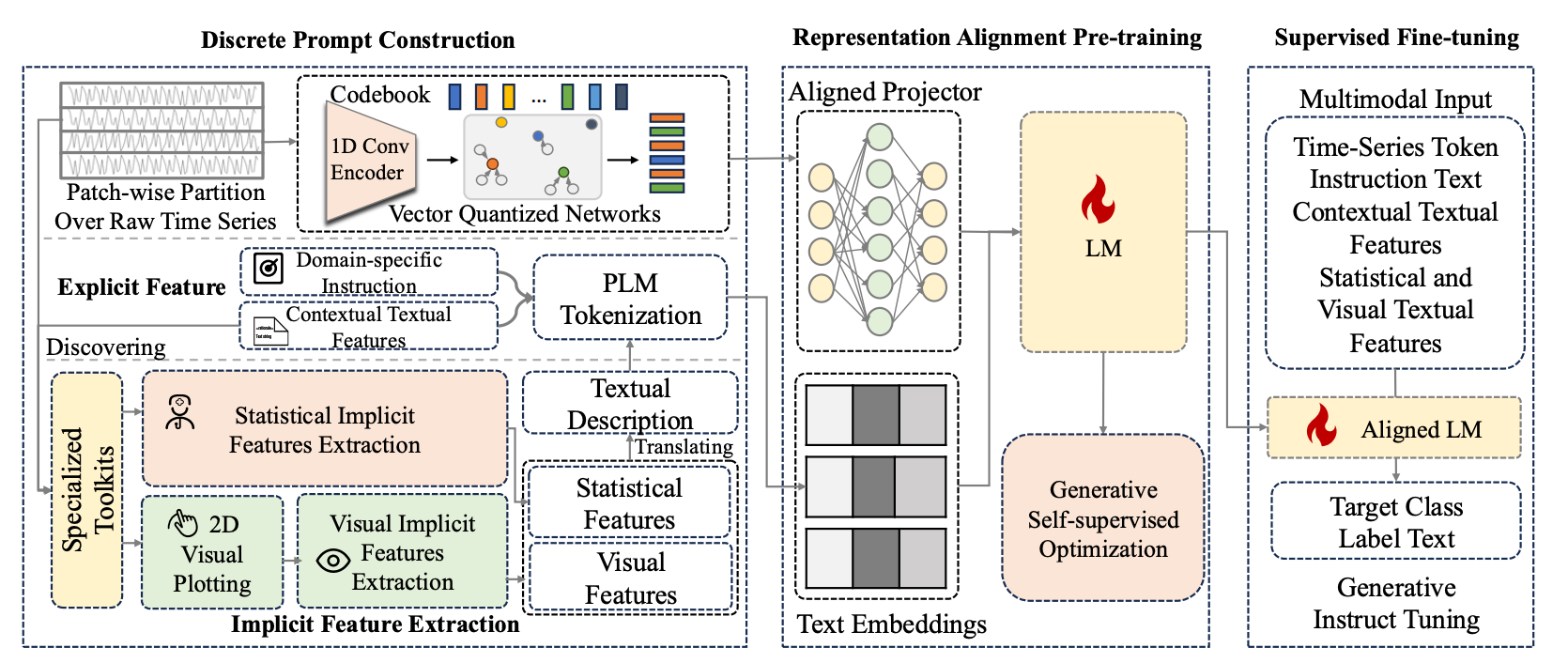

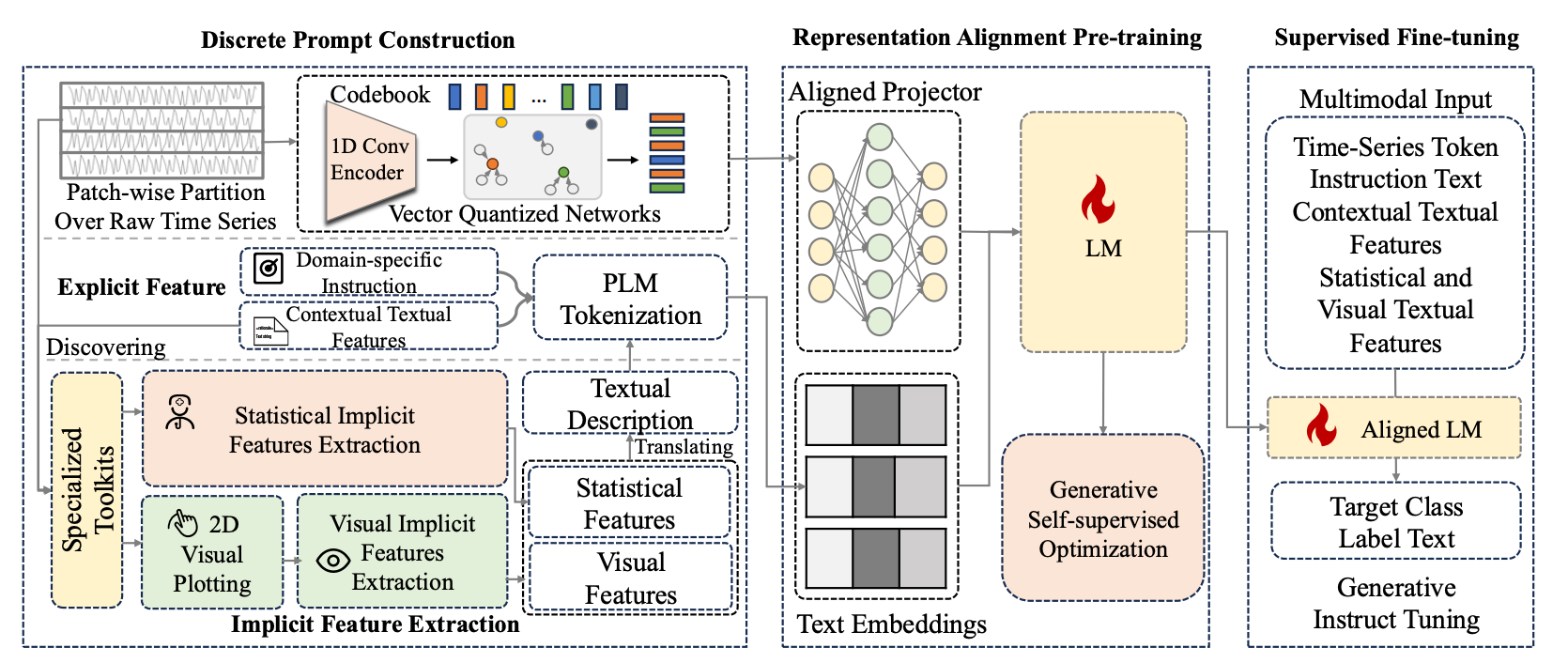

Extends InstructTime with implicit feature enhancement via statistical feature extraction and vision-language-based image captioning to improve classification performance.

Paper →

Time Series Initiative

We develop cutting-edge methods for time series classification and forecasting, leveraging multimodal language modeling, self-supervised learning, and reasoning-based approaches to push the boundaries of temporal data understanding.

Research Projects

Our research spans multiple directions: multimodal language modeling for classification, reasoning-based forecasting, self-supervised representation learning, and comprehensive surveys.


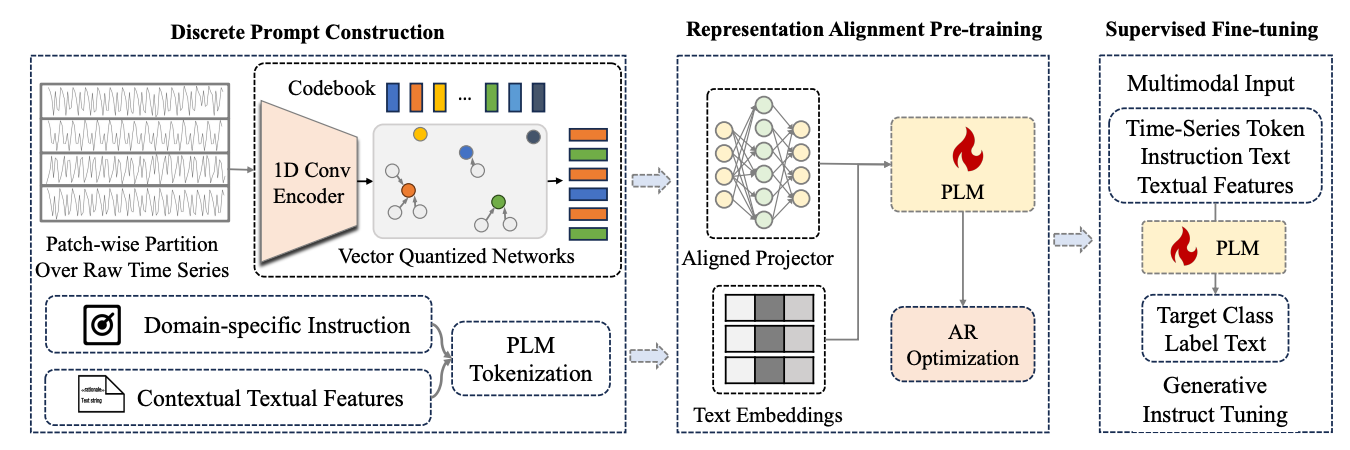

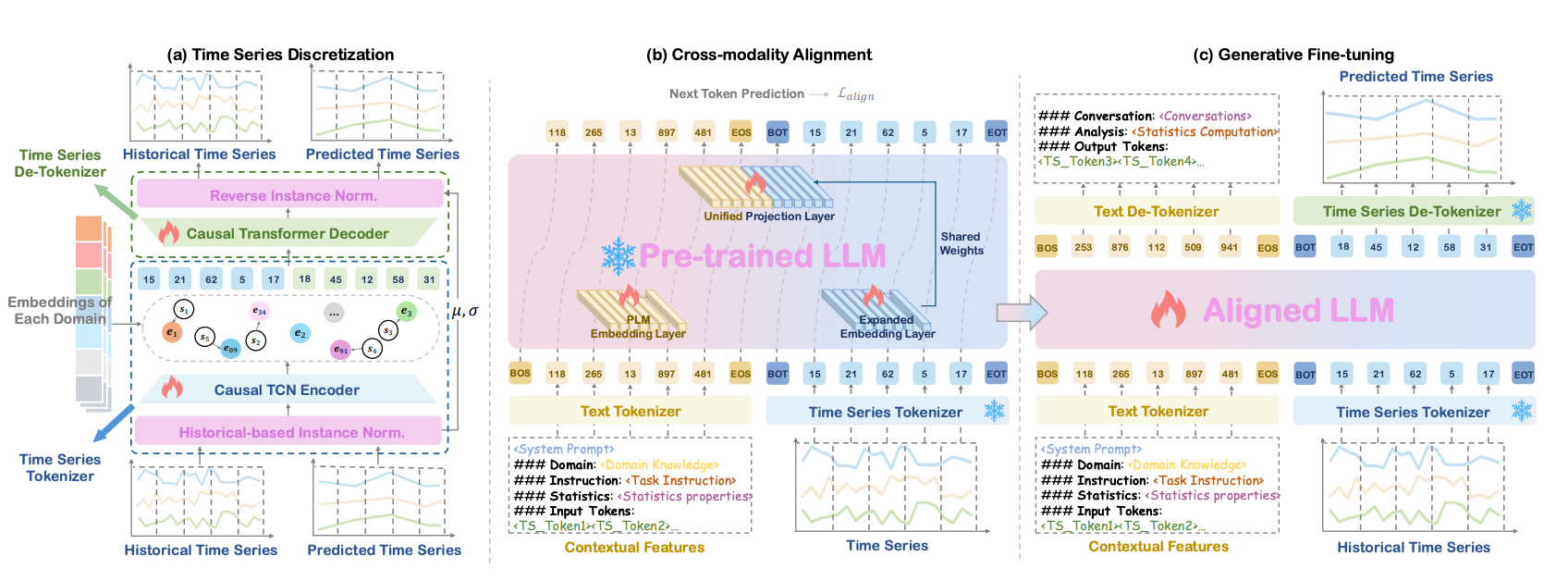

Reformulates time series classification as a multimodal generative task, converting continuous sequences into discrete tokens and leveraging pre-trained language models.

Paper →
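To make the "discrete tokens" step concrete, here is a minimal sketch that turns a numeric sequence into token ids a language model could consume. It assumes uniform binning of z-normalized values; InstructTime's actual tokenizer may differ (for example, a learned codebook), and `tokenize`, `to_prompt`, and the `<tK>` token format are hypothetical names used only for illustration.

```python
from statistics import mean, pstdev


def tokenize(series, n_bins=8, lo=-2.0, hi=2.0):
    """Map a real-valued series to integer token ids.

    The series is z-normalized, clipped to [lo, hi], and binned into
    n_bins equal-width buckets (a simple stand-in for a learned tokenizer).
    """
    mu = mean(series)
    sigma = pstdev(series) or 1.0  # avoid division by zero on constant input
    width = (hi - lo) / n_bins
    tokens = []
    for x in series:
        z = min(max((x - mu) / sigma, lo), hi)  # z-score, clipped to the bin range
        idx = int((z - lo) / width)
        tokens.append(min(idx, n_bins - 1))  # z == hi belongs to the last bin
    return tokens


def to_prompt(tokens):
    # Hypothetical text format: one "<tK>" marker per token id.
    return " ".join(f"<t{t}>" for t in tokens)
```

For example, `to_prompt(tokenize([1, 2, 3, 4]))` yields `"<t1> <t3> <t4> <t6>"` with the default 8 bins. Clipping keeps outliers in the edge bins so the vocabulary size stays fixed.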

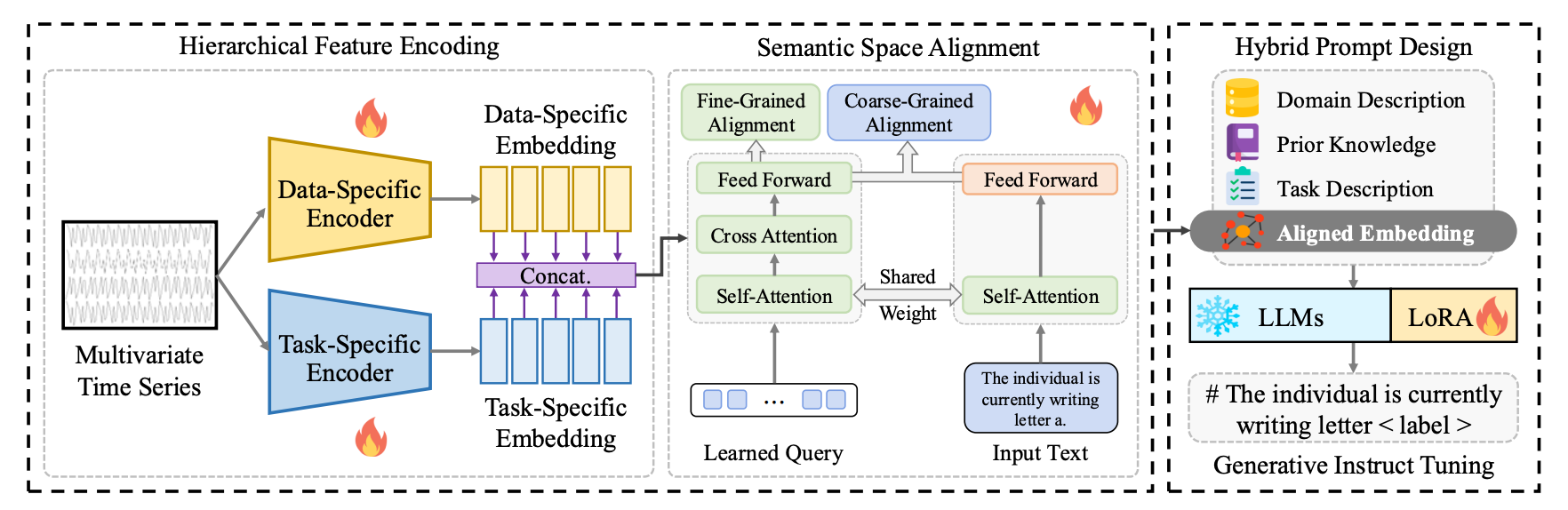

Hierarchical multimodal LLMs with semantic space alignment for time series classification: hierarchical sequence encoding, coarse-to-fine cross-modal alignment, and generative classification via parameter-efficient fine-tuning.

Paper →

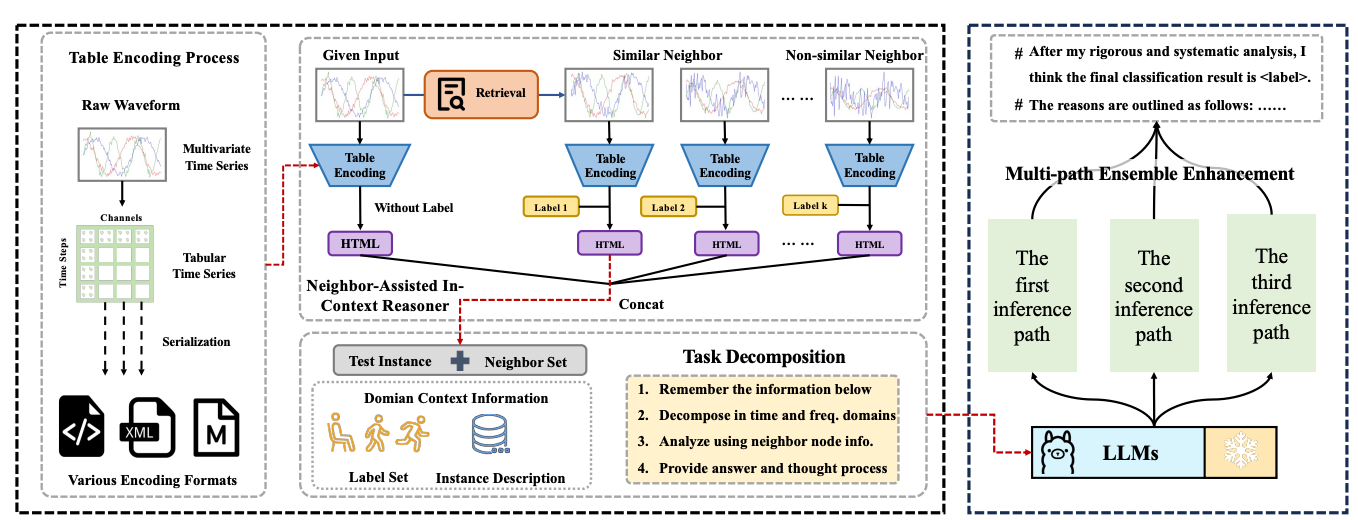

Reformulates multivariate time series classification as zero-shot table understanding via large language models, minimizing information loss through tabular representation.

Paper →
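A tabular reformulation starts by serializing the multivariate series into text. The sketch below renders each time step as a Markdown table row with one column per channel; the Markdown layout, the default `chN` column names, and two-decimal precision are assumptions, not the paper's prompt format.

```python
def series_to_table(channels, names=None, precision=2):
    """Render a multivariate series (a list of equal-length channels) as a
    Markdown table: one row per time step, one column per channel."""
    n_steps = len(channels[0])
    if any(len(c) != n_steps for c in channels):
        raise ValueError("all channels must have the same length")
    names = names or [f"ch{i}" for i in range(len(channels))]
    header = "| t | " + " | ".join(names) + " |"
    separator = "|---|" + "---|" * len(channels)
    rows = [
        f"| {t} | " + " | ".join(f"{c[t]:.{precision}f}" for c in channels) + " |"
        for t in range(n_steps)
    ]
    return "\n".join([header, separator, *rows])
```

Keeping every channel value in its own cell, rather than summarizing it, is what lets the table retain the raw information an LLM would reason over.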

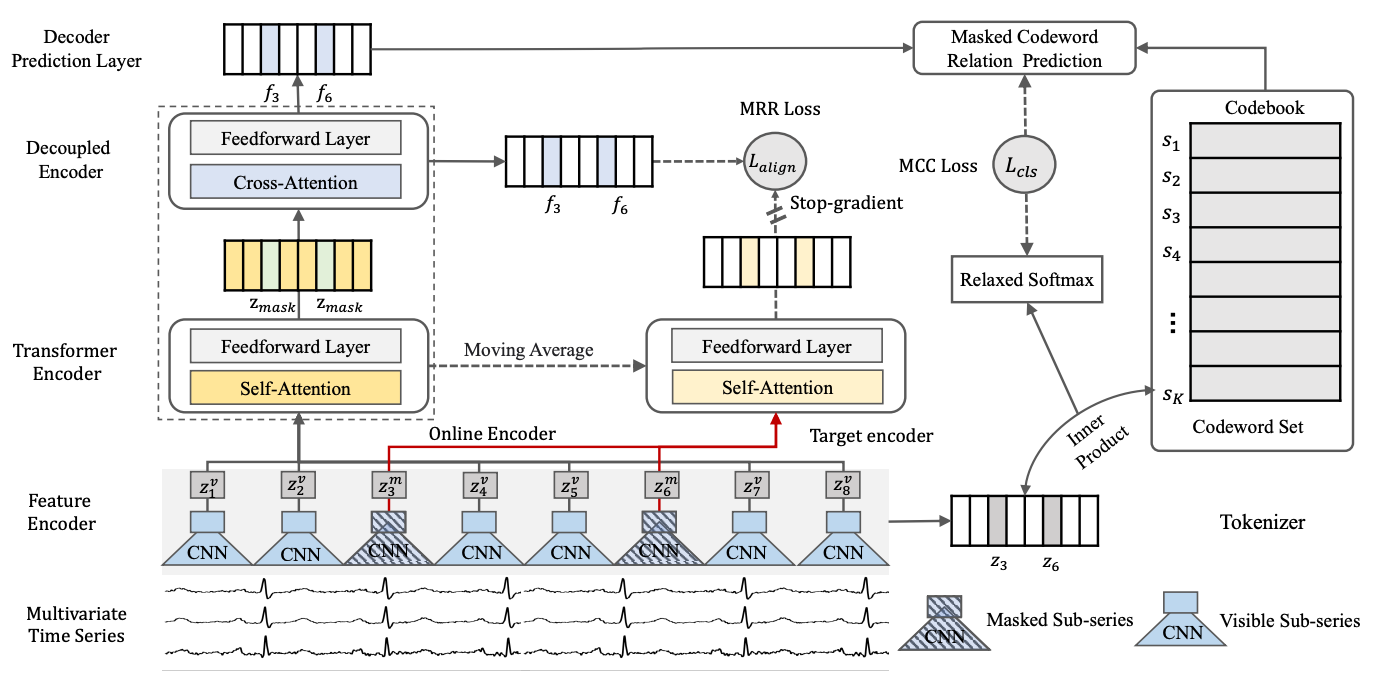

Self-supervised representation learning with decoupled masked autoencoders, learning enriched contextual representations through bidirectional encoding over sub-series.

Paper →
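Masked autoencoders over sub-series share a common first step: cut the series into patches and hide a random subset. This sketch shows only that patch-and-mask step; the paper's decoupled encoder/decoder design is not reproduced, and `patchify`, `random_mask`, and the 50% default mask ratio are illustrative assumptions.

```python
import random


def patchify(series, patch_len):
    """Split a series into non-overlapping sub-series (patches).

    A trailing remainder shorter than patch_len is dropped.
    """
    n = len(series) // patch_len
    return [list(series[i * patch_len:(i + 1) * patch_len]) for i in range(n)]


def random_mask(patches, mask_ratio=0.5, seed=None):
    """Pick a random subset of patch indices to hide.

    Returns (visible_ids, masked_ids), each sorted. An encoder would see only
    the visible patches; a decoder would be trained to reconstruct the masked ones.
    """
    rng = random.Random(seed)
    ids = list(range(len(patches)))
    rng.shuffle(ids)
    n_mask = int(len(ids) * mask_ratio)
    return sorted(ids[n_mask:]), sorted(ids[:n_mask])
```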

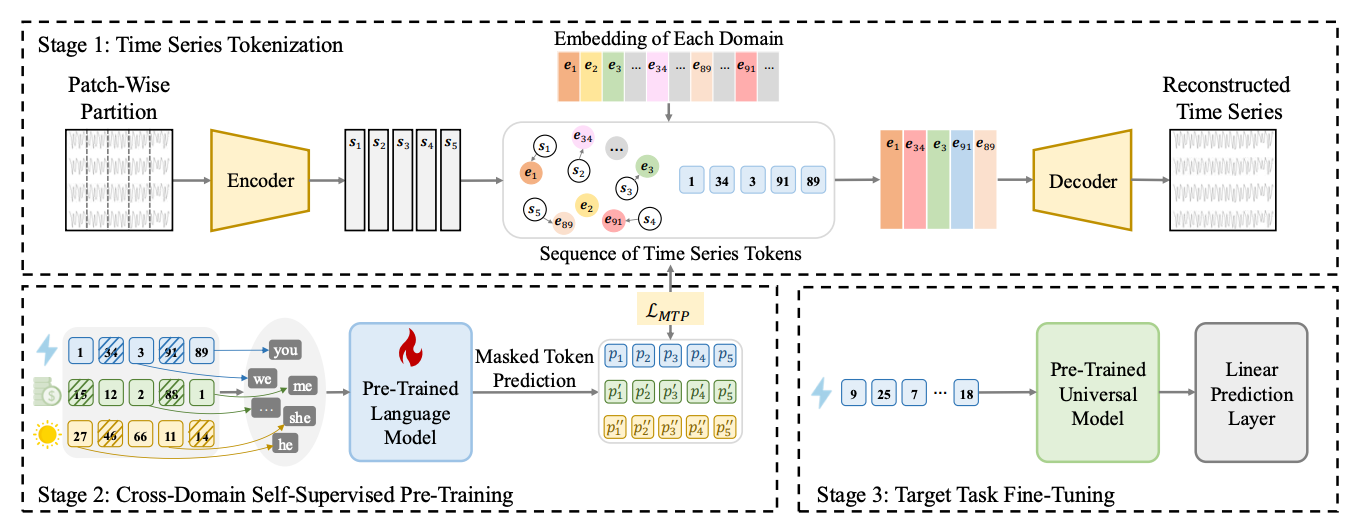

Cross-domain self-supervised pre-training with language models for transferable time series representations, using vector quantization tokenization and recovery of corrupted regions; the representations serve downstream classification and forecasting tasks.

Code →
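Vector-quantization tokenization assigns each patch the id of its nearest codebook entry. A minimal sketch, assuming a fixed codebook and squared Euclidean distance; in a trained model the codebook would be learned, and `vq_tokenize` is a hypothetical helper rather than the project's code.

```python
def vq_tokenize(patches, codebook):
    """Assign each patch the index of its nearest codebook vector.

    Distance is squared Euclidean. Returns (token_ids, quantized_patches).
    """
    def sq_dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    ids = [
        min(range(len(codebook)), key=lambda k: sq_dist(p, codebook[k]))
        for p in patches
    ]
    return ids, [codebook[k] for k in ids]
```

The returned ids form the discrete vocabulary a language model can be pre-trained on, while the quantized patches show what information the tokens preserve.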

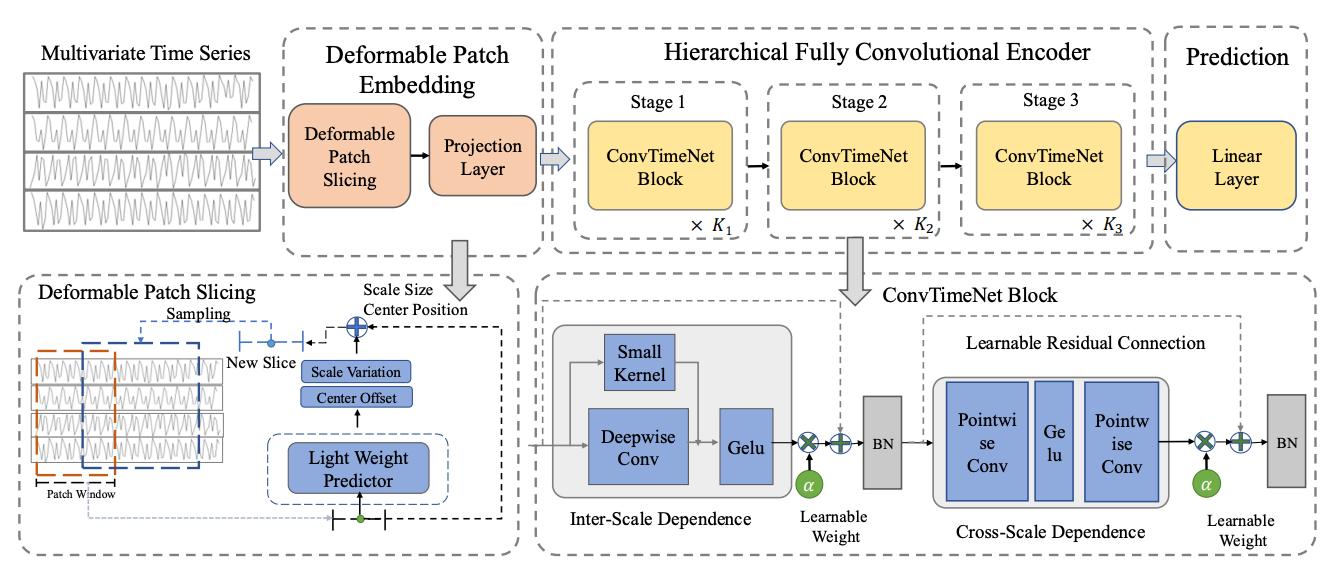

Hierarchical fully convolutional model with deformable patch layers for adaptive perception of local patterns and multi-scale dependency modeling.

Paper →

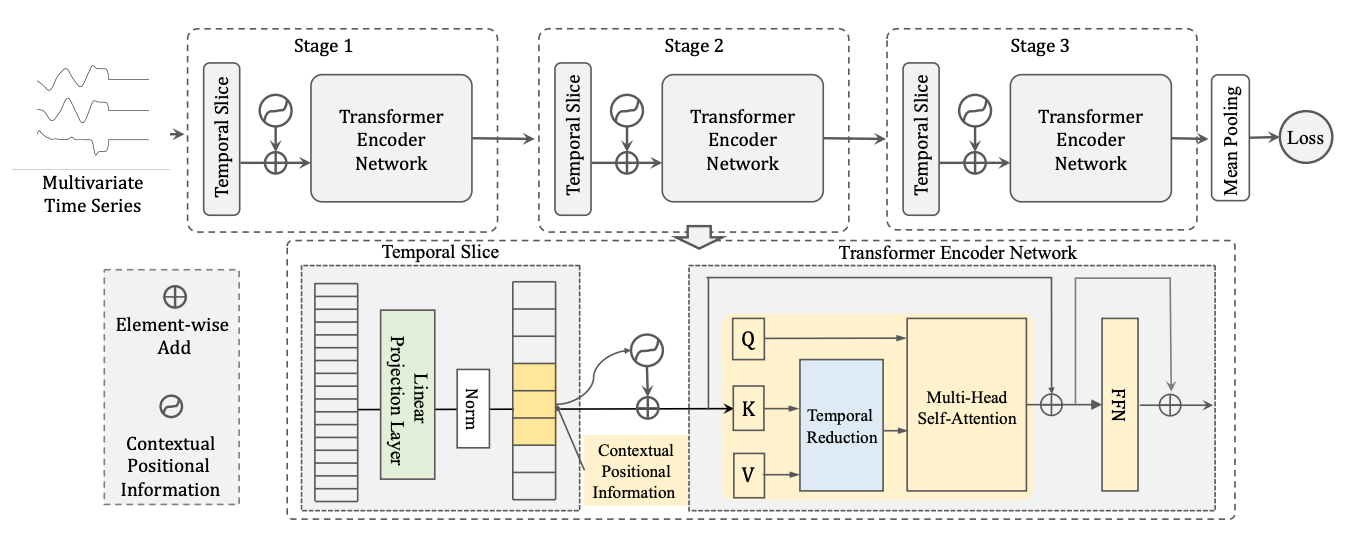

Hierarchical multi-scale representation for multivariate time series classification, capturing temporal dependencies at multiple granularities.

Paper →
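One simple way to obtain views of a series at several granularities is repeated average pooling. The sketch below is an illustrative assumption, not the paper's architecture; for a multivariate series it would be applied to each channel separately, and the scales (1, 2, 4) are arbitrary.

```python
def avg_pool(series, window):
    """Non-overlapping average pooling; a shorter trailing window is averaged as-is."""
    out = []
    for i in range(0, len(series), window):
        chunk = series[i:i + window]
        out.append(sum(chunk) / len(chunk))
    return out


def multi_scale(series, scales=(1, 2, 4)):
    """Return {scale: pooled series}, from the finest (scale 1) to the coarsest view."""
    return {s: avg_pool(series, s) for s in scales}
```

Coarser scales smooth out short-term fluctuations, so a model reading all scales can relate local detail to longer-range dependencies.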

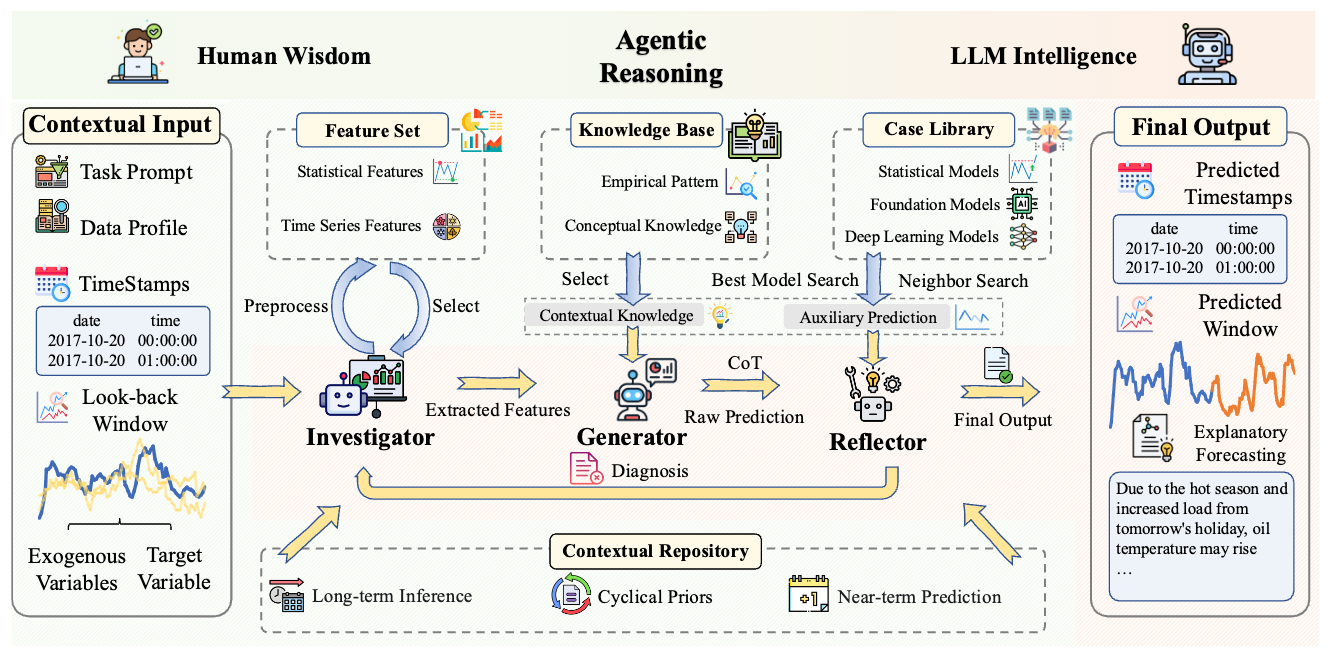

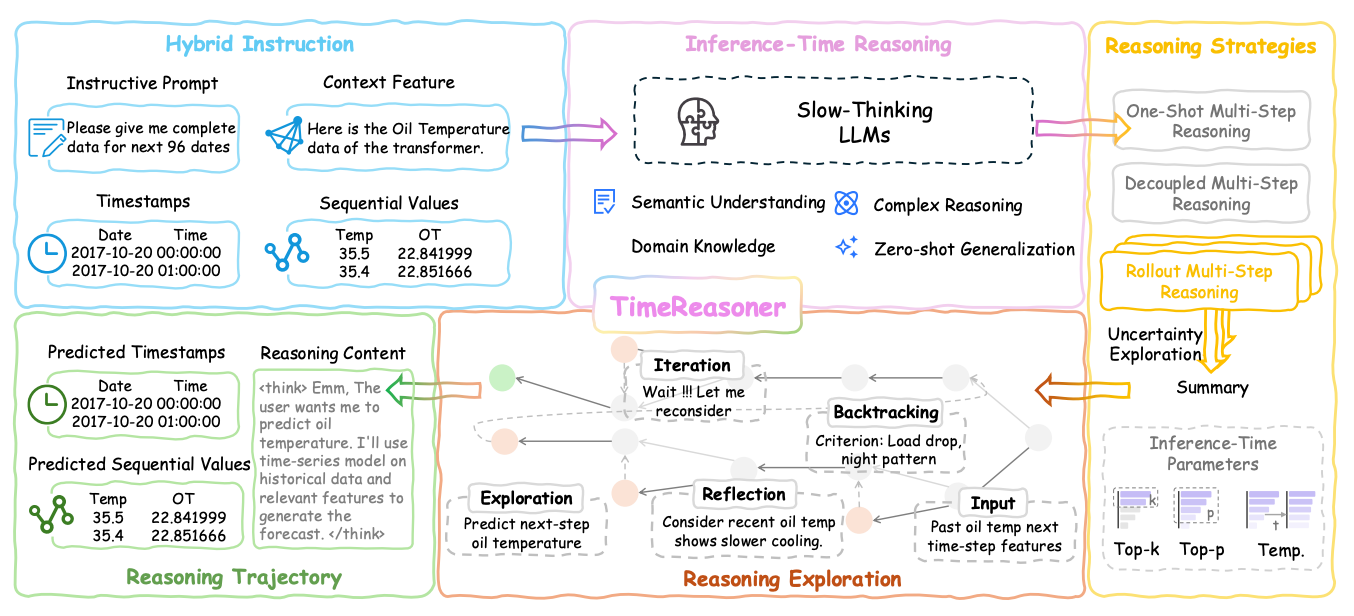

Interaction-driven agentic reasoning framework for cognition-inspired forecasting, organizing prediction into a multi-stage workflow with context preparation and reflective evaluation.

Paper →

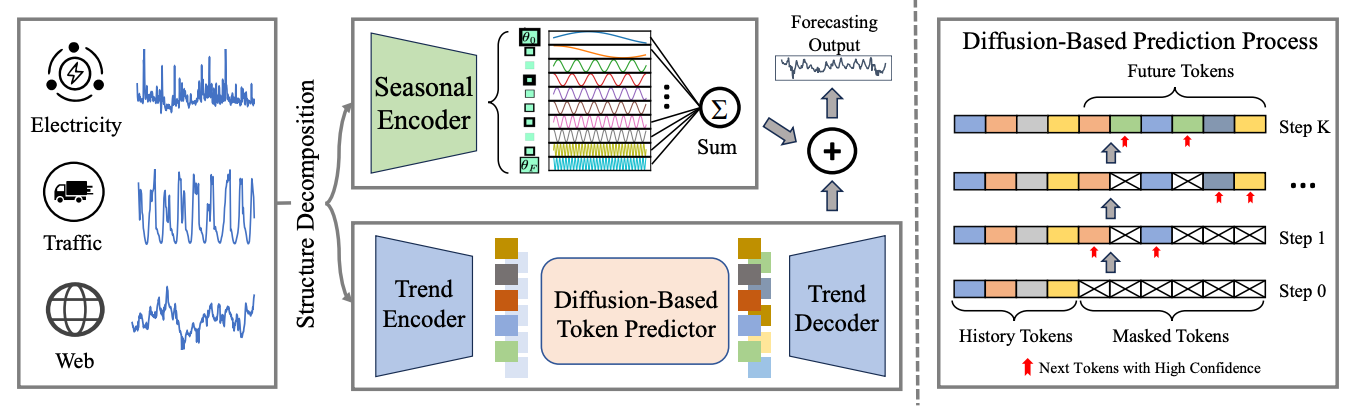

Structured decomposition and modular generation for cross-domain time series forecasting: decomposes each series into a seasonal component (lightweight projection with basis functions) and a trend component (semantic-aware tokenizer + masked discrete diffusion) to generalize across domains.

Paper →
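If the seasonal basis functions are taken to be Fourier harmonics (an assumption; the paper's basis may differ), projecting onto them reduces to inner products, thanks to the discrete orthogonality of sines and cosines. `fourier_seasonal` is a hypothetical helper that reconstructs the periodic part from the first few harmonics and deliberately excludes the constant level (k = 0).

```python
import math


def fourier_seasonal(series, n_harmonics=2):
    """Reconstruct the periodic part of a series from its first n_harmonics
    Fourier harmonics (k = 1..n_harmonics, period = len(series) / k).

    Coefficients come from inner products with cos/sin basis vectors, which
    is an exact least-squares projection because these vectors are mutually
    orthogonal over N samples for 1 <= k < N/2. The level (k = 0) is excluded.
    """
    N = len(series)
    if not 1 <= n_harmonics < N / 2:
        raise ValueError("need 1 <= n_harmonics < len(series) / 2")
    out = [0.0] * N
    for k in range(1, n_harmonics + 1):
        cos_k = [math.cos(2 * math.pi * k * n / N) for n in range(N)]
        sin_k = [math.sin(2 * math.pi * k * n / N) for n in range(N)]
        a = 2 / N * sum(x * c for x, c in zip(series, cos_k))
        b = 2 / N * sum(x * s for x, s in zip(series, sin_k))
        for n in range(N):
            out[n] += a * cos_k[n] + b * sin_k[n]
    return out
```

With only a handful of coefficients per harmonic, this kind of projection is cheap, which matches the "lightweight" role the seasonal branch plays in the description.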

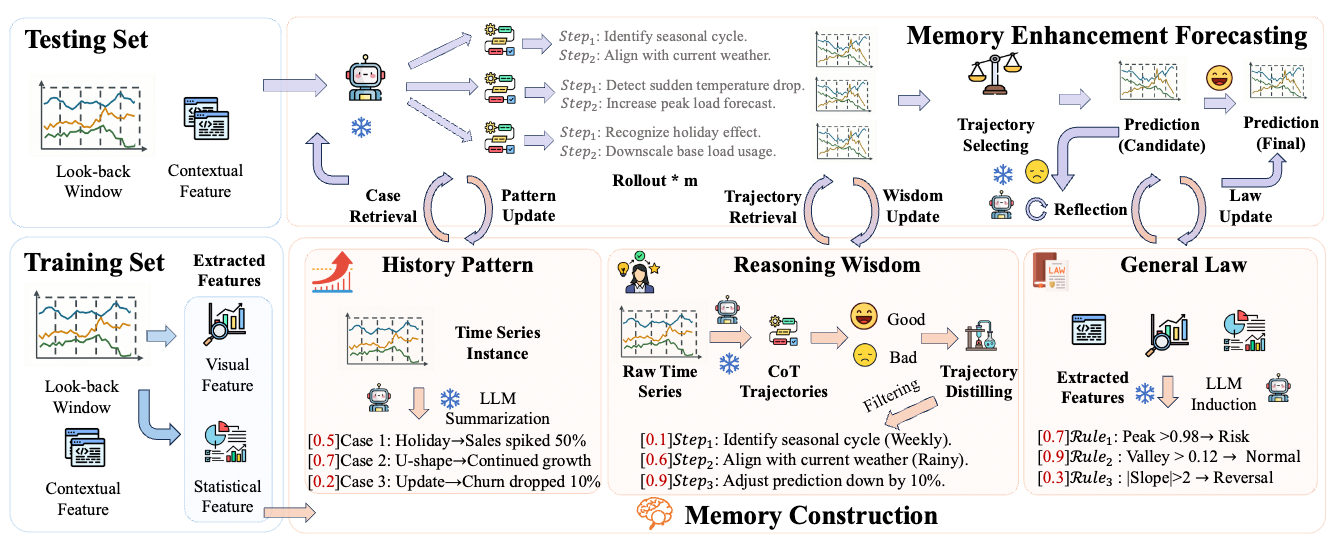

Memory-driven forecasting with experience-conditioned reasoning, learning historical patterns, reasoning wisdom, and general laws from training data.

Code →

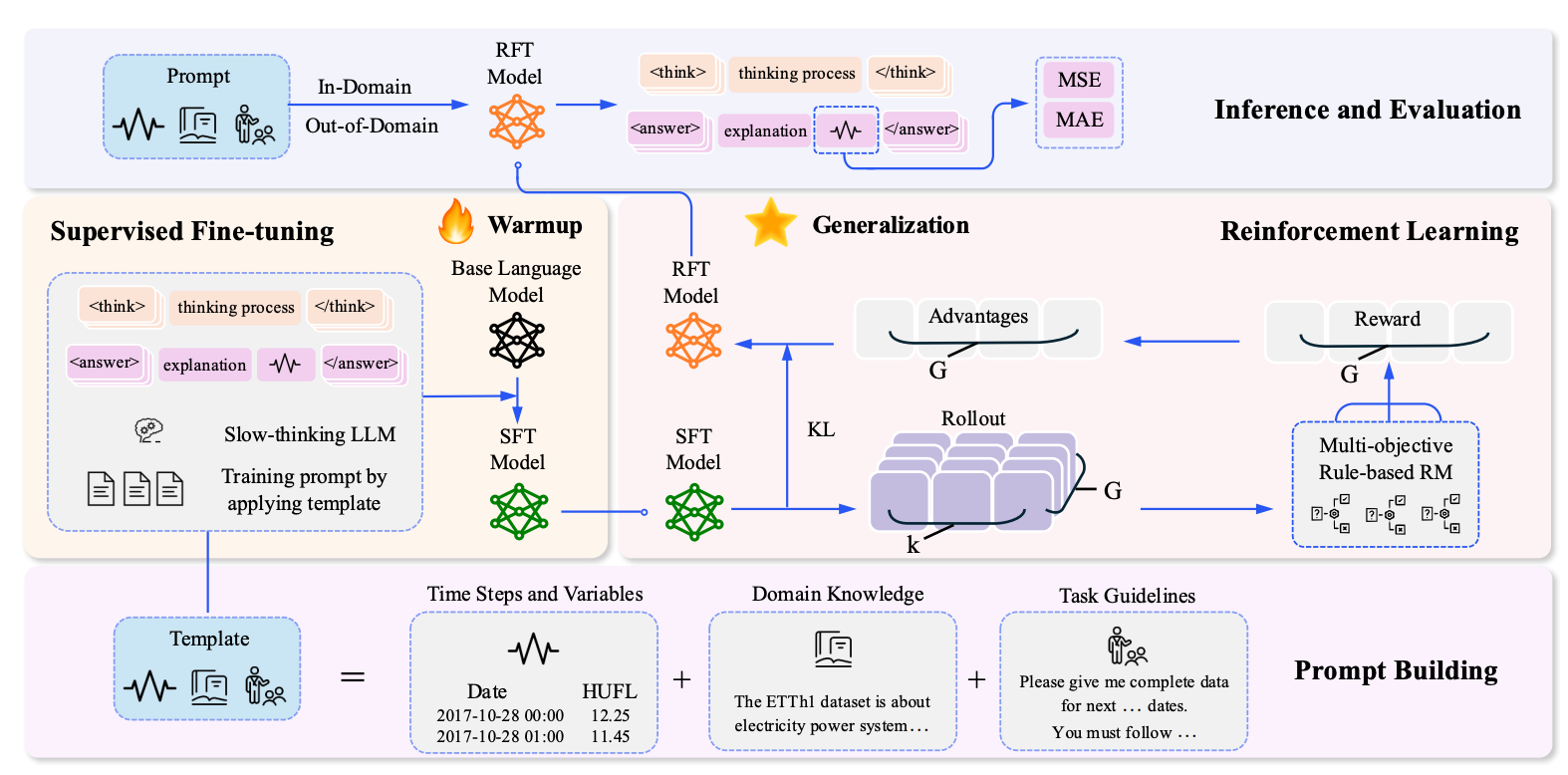

Slow-thinking approach with reinforced LLMs for time series forecasting, employing two-stage reinforcement fine-tuning to enhance multi-step reasoning ability.

Paper →

LLM-driven framework for context-aware forecasting via symbolic discretization, transforming continuous sequences into temporal tokens for a unified representation.

Paper →
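Symbolic Aggregate approXimation (SAX) is a classic way to discretize a series into symbols and is shown here as an illustrative stand-in; the framework's own discretization scheme may differ. The sketch z-normalizes, averages into near-equal segments (piecewise aggregate approximation), and maps each segment mean to a letter using equiprobable Gaussian breakpoints. The function name and default four-letter alphabet are assumptions.

```python
from bisect import bisect_right
from statistics import NormalDist, mean, pstdev


def sax(series, n_segments, alphabet="abcd"):
    """Symbolic Aggregate approXimation: z-normalize, average into n_segments
    near-equal segments (PAA), then map each segment mean to a symbol using
    breakpoints that split N(0, 1) into len(alphabet) equiprobable regions."""
    if not 1 <= n_segments <= len(series):
        raise ValueError("need 1 <= n_segments <= len(series)")
    mu, sigma = mean(series), pstdev(series) or 1.0
    z = [(x - mu) / sigma for x in series]
    n = len(z)
    paa = []
    for i in range(n_segments):
        seg = z[i * n // n_segments:(i + 1) * n // n_segments]
        paa.append(sum(seg) / len(seg))
    a = len(alphabet)
    breakpoints = [NormalDist().inv_cdf(j / a) for j in range(1, a)]
    return "".join(alphabet[bisect_right(breakpoints, v)] for v in paa)
```

For a steadily rising series such as `[1, 2, ..., 8]` with four segments, the result is `"abcd"`, a compact symbolic summary that fits naturally into an LLM prompt.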

Empirical study of slow-thinking LLMs reasoning over temporal patterns, demonstrating non-trivial zero-shot forecasting capabilities in capturing trends and contextual shifts.

Paper →

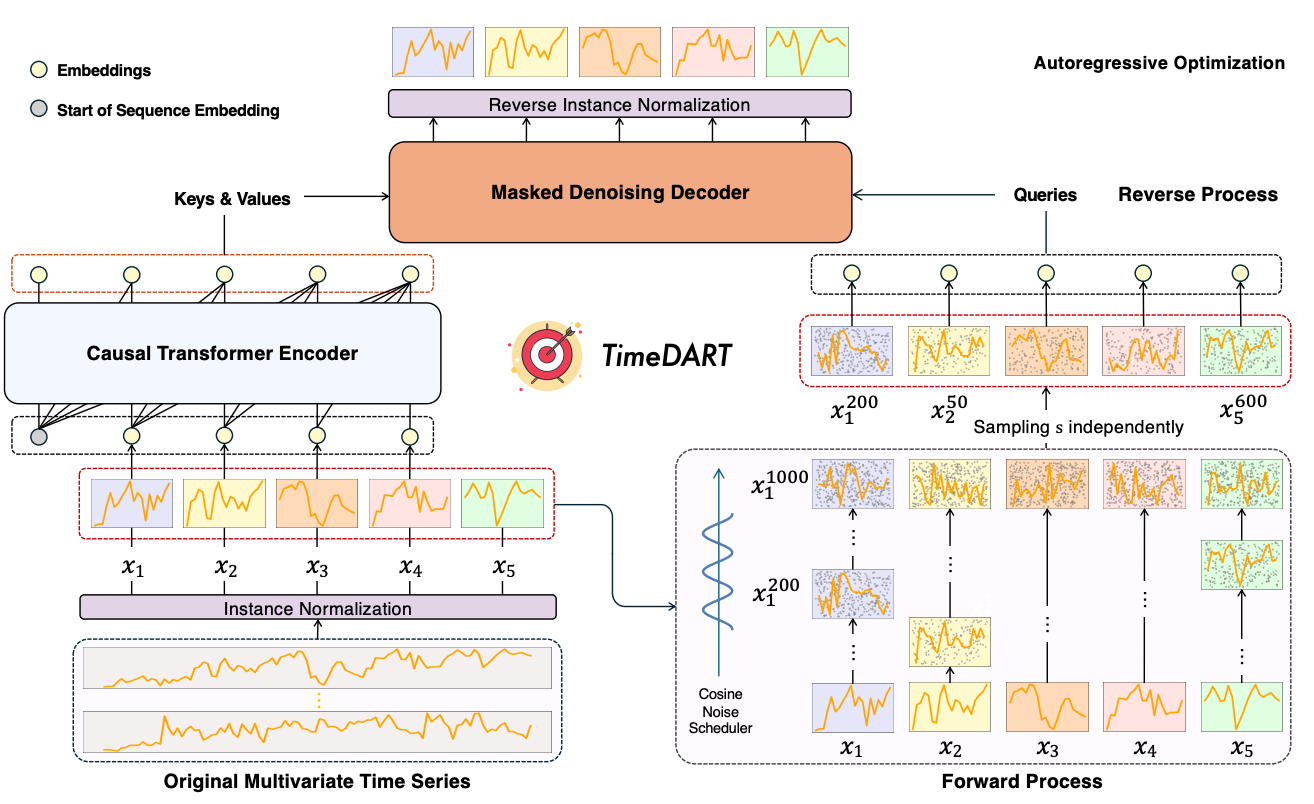

Diffusion autoregressive transformer for self-supervised time series representation, unifying causal transformer encoding with denoising diffusion for global and local patterns.

Paper →
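The denoising-diffusion side of such a model relies on a forward noising process. The sketch below implements the standard DDPM forward step, q(x_t | x_0) = N(sqrt(alpha_bar_t) * x_0, (1 - alpha_bar_t) * I), with a linear beta schedule; the schedule values are common defaults assumed here, and the causal transformer that would condition the denoiser is omitted.

```python
import math
import random


def linear_alpha_bars(T=100, beta_start=1e-4, beta_end=0.02):
    """Cumulative products alpha_bar_t = prod_{s<=t} (1 - beta_s)
    for a beta schedule that rises linearly from beta_start to beta_end."""
    alpha_bars, prod = [], 1.0
    for t in range(T):
        beta = beta_start + (beta_end - beta_start) * t / (T - 1)
        prod *= 1.0 - beta
        alpha_bars.append(prod)
    return alpha_bars


def q_sample(x0, t, alpha_bars, rng):
    """Draw x_t ~ q(x_t | x_0) and return (x_t, eps), where eps is the
    Gaussian noise a denoising network would be trained to predict."""
    ab = alpha_bars[t]
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(ab) * x + math.sqrt(1 - ab) * e for x, e in zip(x0, eps)]
    return xt, eps
```

Usage: `xt, eps = q_sample([0.5, -1.0, 2.0], 50, linear_alpha_bars(), random.Random(0))` produces a noised copy at step 50; training would regress a network's output onto `eps`.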

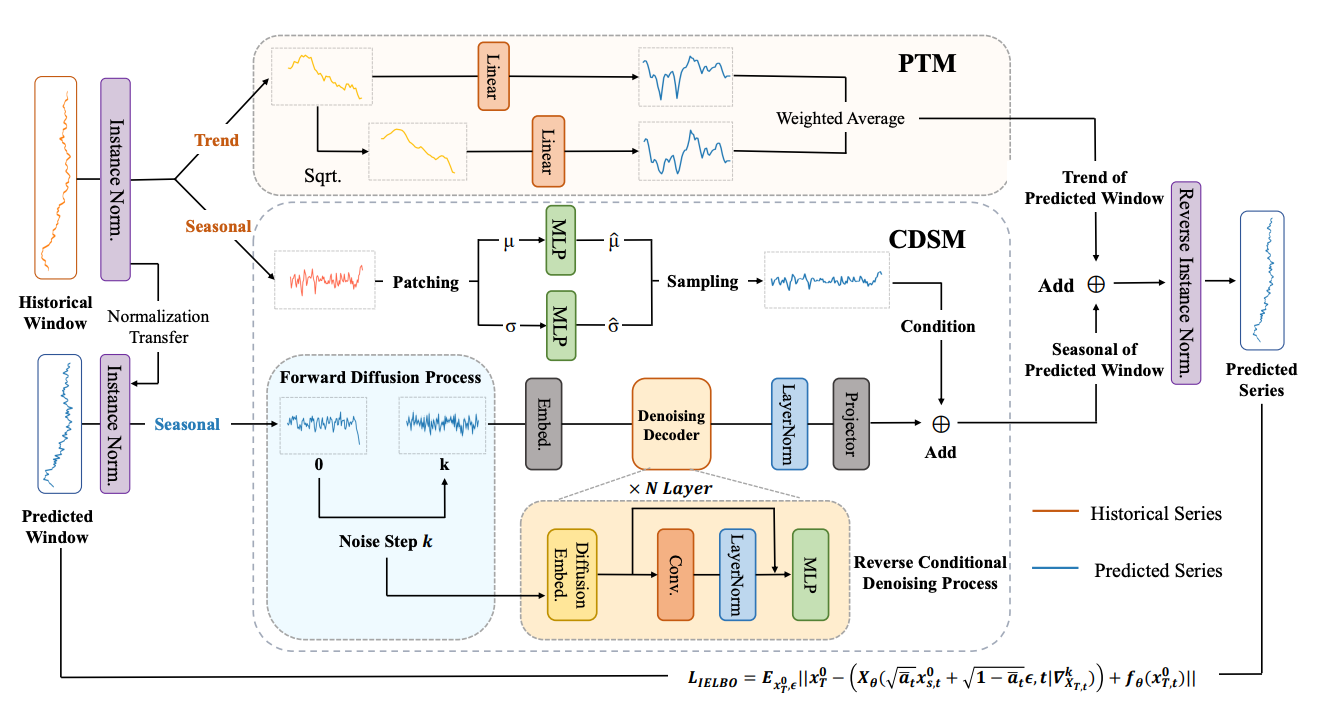

Flexible decoupled framework that decomposes time series into trend and seasonal components, employing conditional denoising (diffusion) for the fluctuating seasonal part and enhanced linear models for the smooth trend, trained end-to-end.

Paper →
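The trend/seasonal split can be sketched with a centered moving average: the smoothed series is the trend and the remainder is the seasonal part. Moving-average decomposition with edge padding is an assumption here; the framework's own decomposition may differ, and `decompose` is a hypothetical helper.

```python
def decompose(series, kernel=5):
    """Split a series into (trend, seasonal).

    trend: centered moving average of odd width `kernel`, with the first and
    last values repeated so the output has the same length as the input.
    seasonal: series minus trend, so trend + seasonal == series.
    """
    if kernel % 2 == 0:
        raise ValueError("kernel must be odd")
    half = kernel // 2
    padded = [series[0]] * half + list(series) + [series[-1]] * half
    trend = [sum(padded[i:i + kernel]) / kernel for i in range(len(series))]
    seasonal = [x - t for x, t in zip(series, trend)]
    return trend, seasonal
```

Because the two parts sum back to the input exactly, each can be handled by a different model (a linear model for the smooth trend, a diffusion model for the fluctuating seasonal part) and the forecasts added together.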

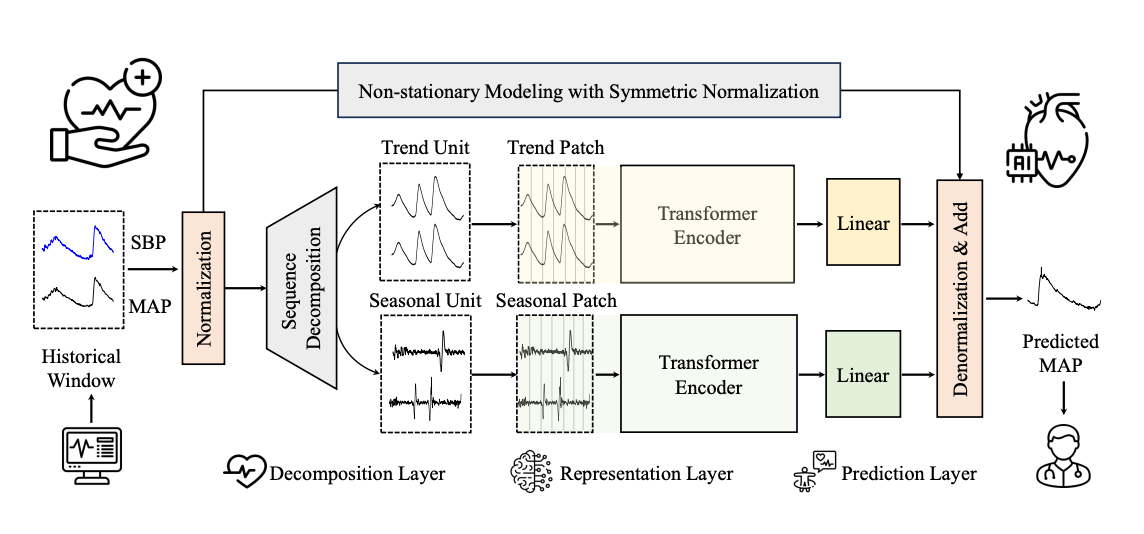

Hybrid multi-factor network with dynamic sequence modeling for early warning of intraoperative hypotension; decomposes physiological signals into trend and seasonal components with patch-based Transformers and symmetric normalization.

Paper →

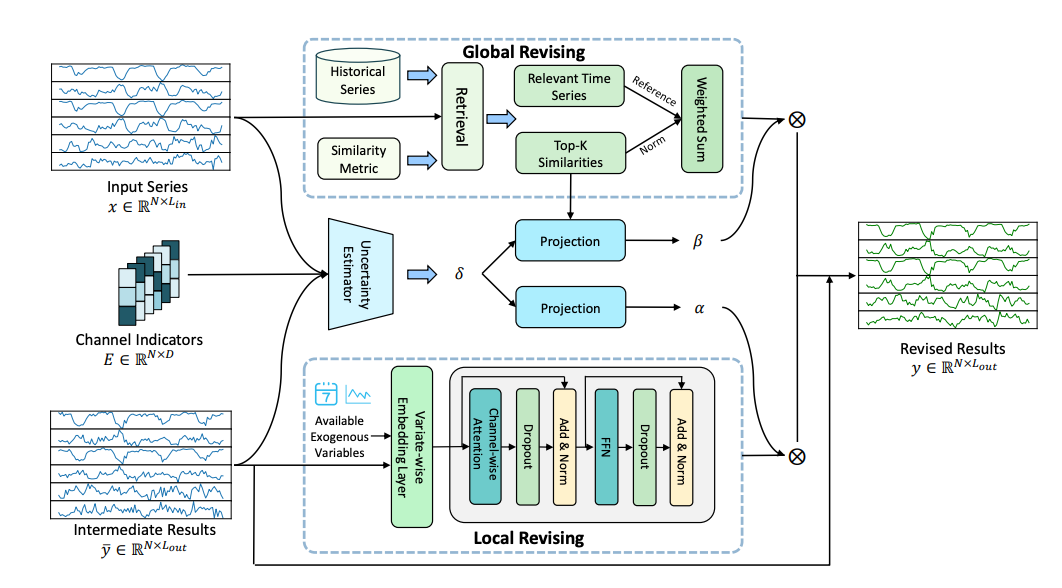

Instance-aware post-hoc revision framework for improving forecasting via identification and revision of biased instances using contextual information.

Paper →

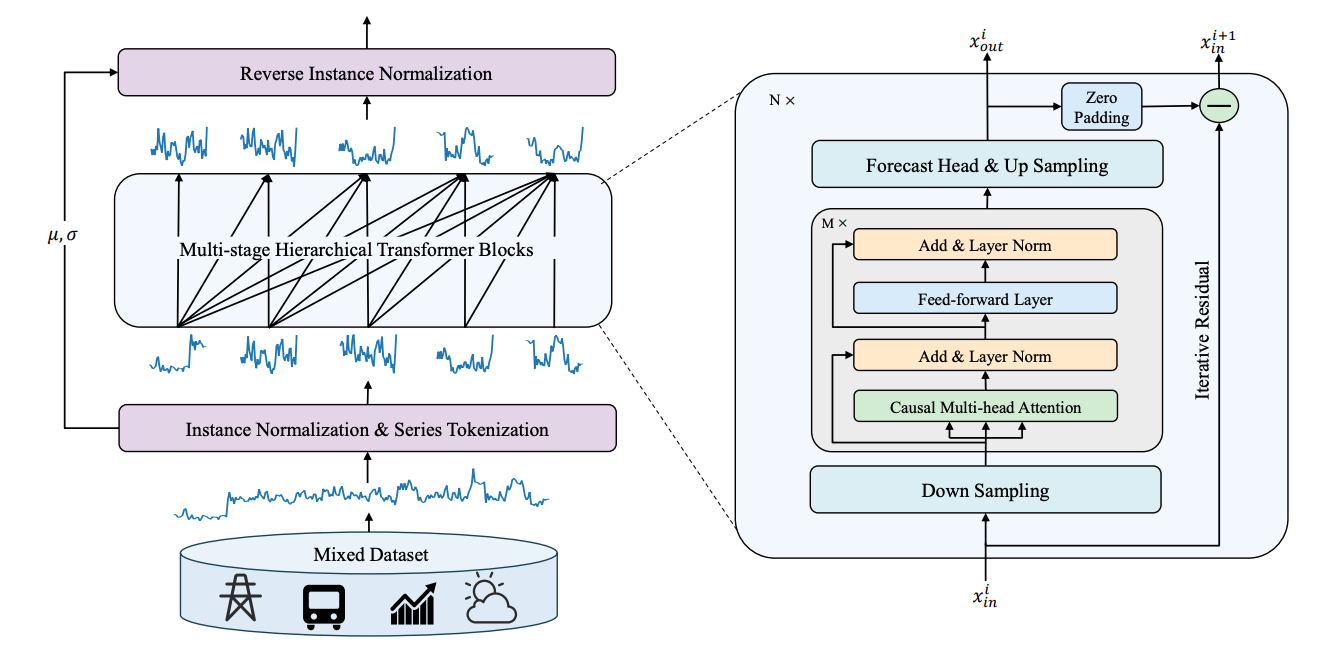

Generative pretrained hierarchical transformer for forecasting, employing mixed dataset pretraining and autoregressive generation for arbitrary horizon settings.

Paper →
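Autoregressive generation lets a model with a fixed output length cover any horizon: predict a chunk, append it to the context, and repeat. In this sketch `step_fn` is a hypothetical stand-in for the pretrained transformer, and `rollout` truncates the last chunk so exactly `horizon` values are returned.

```python
from typing import Callable, List


def rollout(history: List[float],
            step_fn: Callable[[List[float]], List[float]],
            horizon: int,
            context_len: int) -> List[float]:
    """Forecast `horizon` steps by repeatedly calling step_fn on the latest
    context window and appending its output (a chunk of one or more steps)."""
    buf = list(history)
    out: List[float] = []
    while len(out) < horizon:
        chunk = step_fn(buf[-context_len:])
        if not chunk:
            raise ValueError("step_fn must return at least one value")
        buf.extend(chunk)
        out.extend(chunk)
    return out[:horizon]
```

For example, with a naive drift forecaster `drift = lambda ctx: [ctx[-1] + (ctx[-1] - ctx[0]) / (len(ctx) - 1)]`, `rollout([0, 1, 2, 3], drift, horizon=3, context_len=4)` returns `[4.0, 5.0, 6.0]`.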

Adaptive normalization for non-stationary time series forecasting from a temporal slice perspective, eliminating non-stationarity at the local slice level.

Paper →
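Slice-level normalization can be sketched as follows: each non-overlapping slice is normalized by its own mean and standard deviation, and the saved statistics invert the transform. This covers only the normalize/denormalize round trip; in forecasting, the statistics of future slices are unknown and would have to be estimated, and that estimator is not shown. Function names and `eps` are assumptions.

```python
from statistics import mean, pstdev


def slice_normalize(series, slice_len, eps=1e-8):
    """Normalize each non-overlapping slice by its own mean and std.

    Returns (normalized_values, per_slice_stats), where per_slice_stats is a
    list of (mean, std) pairs needed to undo the transform.
    """
    normed, stats = [], []
    for i in range(0, len(series), slice_len):
        s = series[i:i + slice_len]
        mu, sd = mean(s), pstdev(s)
        stats.append((mu, sd))
        normed.extend((x - mu) / (sd + eps) for x in s)
    return normed, stats


def slice_denormalize(normed, stats, slice_len, eps=1e-8):
    """Invert slice_normalize using the stored (mean, std) of each slice."""
    out = []
    for j, (mu, sd) in enumerate(stats):
        chunk = normed[j * slice_len:(j + 1) * slice_len]
        out.extend(x * (sd + eps) + mu for x in chunk)
    return out
```

Normalizing per slice rather than per window removes local shifts in level and scale, which is the sense in which non-stationarity is handled "at the local slice level".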

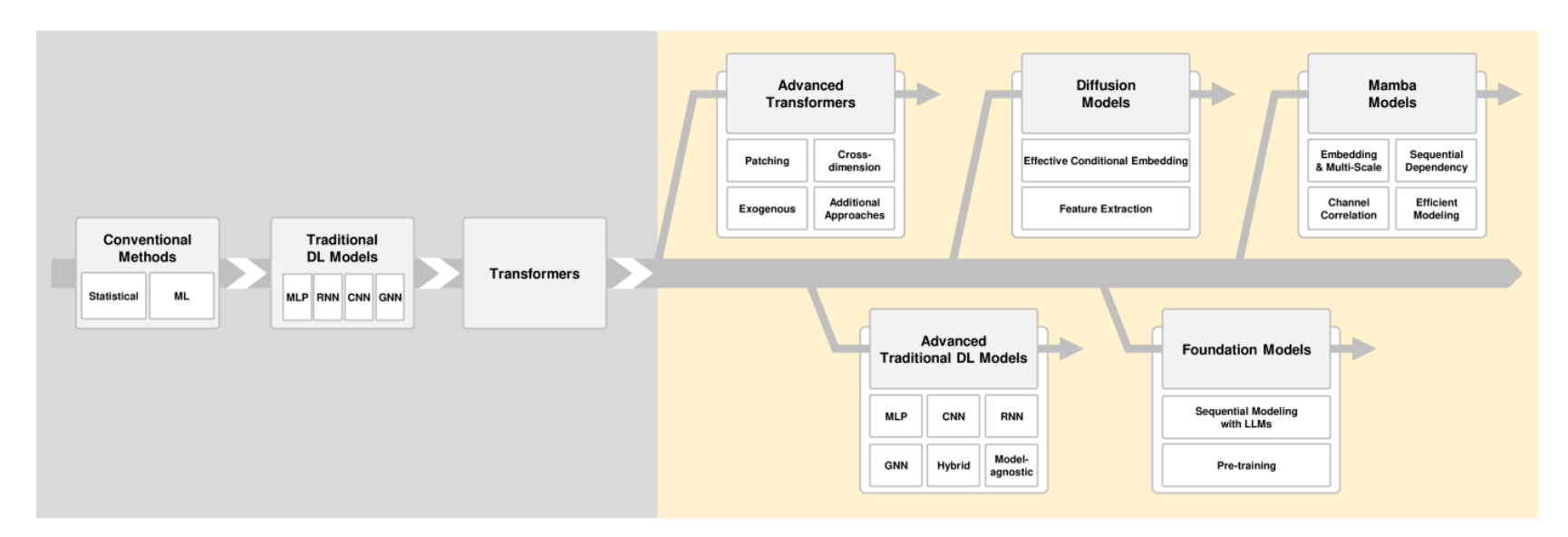

Examines the key entities in time series forecasting (TSF) and their characteristics, introduces a general problem formulation and challenge analysis, proposes a taxonomy that classifies methodologies from the preprocessing and forecasting perspectives, and highlights emerging topics such as transfer learning and trustworthy forecasting.

Paper →

Timeline

Key publications from 2023 to 2026 across classification, forecasting, and representation learning.

2023

Introduced adaptive normalization for non-stationary time series forecasting, addressing distribution shifts through temporal slice-level normalization.

2023

Proposed hierarchical multi-scale representation for multivariate time series classification, establishing a foundation for multi-granularity temporal modeling.

2024

Developed a generative pretrained hierarchical transformer that enables a single model to forecast at arbitrary horizons through mixed-dataset pretraining.

2025

Awarded Best of WSDM for reformulating classification as a multimodal generative task, demonstrating the potential of universal foundation models for time series.

2025

Extends InstructTime with implicit feature enhancement to improve time series classification.

2025

Cross-domain pre-training with LMs for transferable time series representations via vector quantization tokenization and self-supervised recovery objective.

2025

Unified diffusion and autoregressive paradigms for self-supervised representation learning, capturing both global trends and local patterns.

2025

Enabled zero-shot classification by reformulating it as table understanding, achieving natural alignment with the LLM semantic space.

2025

Interaction-driven agentic reasoning framework for cognition-inspired time series forecasting.

2025

Memory-driven forecasting with experience-conditioned reasoning from historical patterns and reasoning wisdom.

2025

Slow-thinking approach with reinforced LLMs for multi-step reasoning in time series forecasting.

2025

LLM-driven context-aware forecasting via symbolic discretization and a unified semantic space.

2025

Model-agnostic framework for instance-aware post-hoc revision, effectively mitigating instance-level errors in forecasting.

2025

Demonstrated the viability of purely convolutional models with a hierarchical architecture and deformable patch layers for time series analysis.

2025

Hierarchical multimodal LLMs with semantic space alignment for time series classification, bridging temporal representations with generative reasoning.

2025

Flexible decoupled framework combining diffusion for the seasonal component with enhanced linear models for the trend, trained end-to-end.

2025

Hybrid multi-factor network for early warning of intraoperative hypotension via dynamic sequence modeling and trend-seasonal decomposition.

2025

Structured decomposition and modular generation for cross-domain time series forecasting: seasonal projection with basis functions, and trend modeling via a semantic-aware tokenizer and masked discrete diffusion.

2026

Advanced self-supervised learning with decoupled masked autoencoders, learning transferable representations through bidirectional encoding.

2026

Empirical study revealing slow-thinking LLMs' capabilities in temporal reasoning, paving the way for reasoning-based forecasting paradigms.

—

Concepts, challenges, taxonomy, and future directions for time series forecasting research.

Resources

Converting time series to discrete tokens and leveraging pre-trained language models for classification and forecasting tasks.

Slow-thinking approaches and agentic reasoning frameworks that enable multi-step reasoning over temporal patterns.

Masked autoencoders, diffusion models, and hierarchical transformers for learning transferable time series representations.

In-depth analysis of time series forecasting concepts, challenges, architectural trends, and future directions.

We explore novel paradigms for time series analysis, from multimodal language modeling to reasoning-based forecasting. Our research addresses fundamental challenges in temporal data understanding, including non-stationarity, long-term dependencies, and contextual integration.

Bridging numerical sequences with contextual features through symbolic discretization and alignment strategies for enhanced understanding.

Developing slow-thinking approaches and memory-driven frameworks that enable explicit reasoning over temporal dynamics and dependencies.

Self-supervised learning methods that learn generalizable patterns across diverse time series domains and applications.